The Challenge: AI That Understands India

India’s broadcast and media industry is in the middle of a transformation that goes far beyond better cameras, faster workflows, or smarter editing tools. At BES EXPO 2026, amid discussions on regulation, platforms, and AI-powered production, one message stood out for its depth and long-term implications.

When Prof. Ganesh Ramakrishnan spoke, he was not talking about AI trends.

He was talking about AI foundations.

And more importantly, he was talking about who controls them.

This was not a technical lecture. It was a quiet but powerful statement on how India must think about artificial intelligence if it wants its media, governance, and public information systems to remain credible, inclusive, and sovereign in the decades ahead.

The real question India must answer

The underlying question Prof. Ganesh raises is simple but uncomfortable:

Should India merely use AI, or should India own the intelligence it depends on?

For a country with over a billion people, hundreds of languages, and one of the world’s largest media ecosystems, this question is not theoretical. It directly affects how news is produced, how citizens receive information, how public trust is maintained, and how culture is preserved.

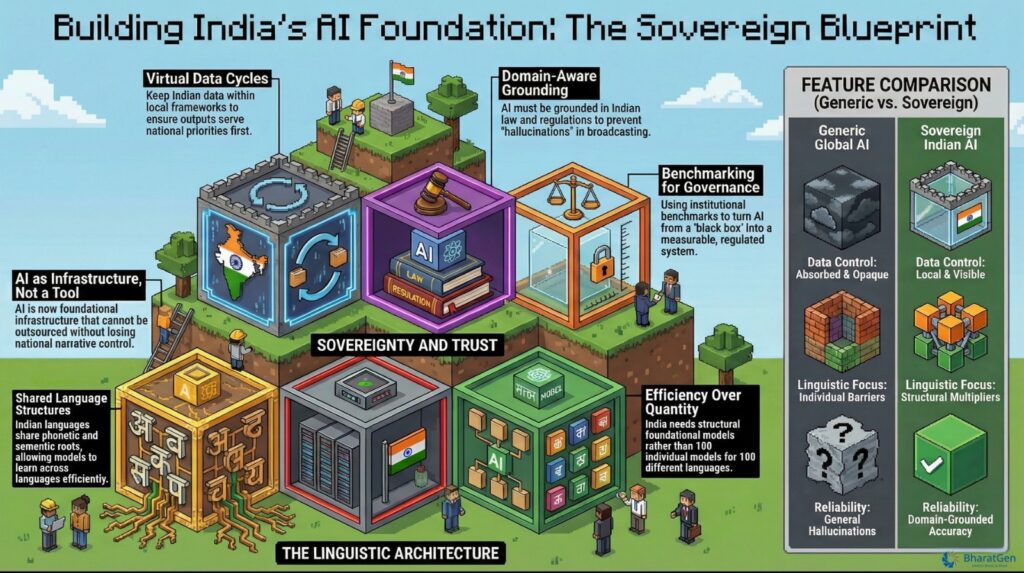

AI, in his framing, is no longer a tool. It is becoming infrastructure.

And infrastructure cannot be outsourced blindly.

India’s languages are not a liability. They are the advantage.

For years, India’s linguistic diversity has been described as an AI challenge. Too many languages. Too many dialects. Too much complexity.

Prof. Ganesh flips this narrative entirely.

He points out that Indian languages are not random or disconnected. They share deep grammatical, phonetic, and semantic structures. When modeled intelligently, these similarities allow AI systems to learn across languages instead of treating each one as a separate problem.

This means something profound:

India does not need 100 different AI models for 100 languages.

India needs foundational models that understand Indian language families at a structural level.

Once this foundation exists:

- Speech-to-text becomes scalable across regions

- Translation improves without losing cultural nuance

- Content discovery reaches audiences previously left out

- Public broadcasting becomes truly inclusive

Language stops being a barrier and becomes a multiplier.

Sovereign AI is about control, not isolation

One of the most important themes in Prof. Ganesh’s message is data sovereignty, but he frames it neither emotionally nor politically.

It is framed operationally.

Today, vast amounts of Indian language data flow into global AI systems. Once absorbed, this data is:

- No longer visible

- No longer controllable

- No longer aligned to Indian priorities

This creates a silent dependency.

Prof. Ganesh argues for what he calls virtuous data cycles:

- Indian data stays within Indian research and institutional frameworks.

- Models are trained, tested, improved, and benchmarked locally.

- Outputs serve Indian media, governance, and industry needs first.

This is not about cutting India off from the world.

It is about ensuring India does not lose ownership of its own voice.

Why generic AI fails in media, governance, and public information

A recurring problem with large, generic language models is hallucination. In entertainment, that may be tolerable. In broadcasting, journalism, law, or disaster communication, it is not.

Prof. Ganesh is clear on this point:

AI used in public-facing systems must be grounded in domain reality.

That means:

- Financial AI must understand Indian regulations and behaviors

- Legal AI must reflect Indian law and jurisprudence

- Healthcare AI must align with Indian medical workflows

- Media AI must respect editorial integrity and factual accuracy

Instead of chasing ever-larger models, his focus is on right-sized, domain-aware AI that can be trusted in real-world deployments.

For broadcasters and media houses, this distinction is critical. Speed without correctness destroys credibility. AI must strengthen trust, not weaken it.

Benchmarks are the missing piece in the AI conversation

One of the least glamorous but most consequential parts of Prof. Ganesh’s work is benchmarking.

He explains that India is building institutional benchmarks to evaluate AI models across:

- Languages

- Domains

- Reasoning ability

- Task execution

- Error rates and interpretability

These benchmarks are being applied to models of up to one billion parameters. The goal is not to chase size but to measure usefulness.

The implication is powerful:

If India cannot measure AI meaningfully, it cannot regulate it, trust it, or deploy it at scale.

Responsible AI is engineered, not declared

Rather than treating ethics as an afterthought, Prof. Ganesh embeds responsibility into system design.

His approach emphasizes:

- Human-in-the-loop workflows

- Transparent decision-making

- Interpretable outputs over opaque intelligence

This is especially relevant for:

- Public broadcasters

- Government platforms

- Citizen-facing services

In such systems, responsibility is not optional. It is what allows AI to exist without eroding public trust.

Why collaboration is non-negotiable

Another subtle but important point in his message is the role of collaboration.

He does not position academia as separate from industry. On the contrary, he stresses that:

- Research must be shaped by deployment realities

- Industry feedback must inform model design

- Media organizations are not just users, but co-creators of applied AI

For public broadcasters, OTT services, and digital newsrooms, this opens a path to AI systems that are built with them, not imposed on them.

How this fits into the larger BES EXPO 2026 narrative

At BES EXPO 2026, many speakers focused on:

- AI-powered production

- Regulatory reform

- New platforms and distribution models

Prof. Ganesh addressed the layer beneath all of it.

Without indigenous, multilingual, domain-aware AI foundations:

- Media innovation becomes fragile

- Regulation becomes reactive

- Trust becomes harder to sustain

- Dependency becomes inevitable

His message can be summed up simply:

If broadcasting is the voice of the nation, AI must understand the nation before it speaks for it.

Why this matters now

India’s media and broadcast ecosystem touches hundreds of millions of people every day. As AI becomes embedded in content creation, discovery, moderation, and archiving, the decisions made now will shape:

- Who controls narratives

- How inclusive media truly is

- How resilient public trust remains

Prof. Ganesh’s intervention at BES EXPO 2026 is a reminder that the future of Indian media will not be decided by tools alone, but by foundations.

The conclave reinforced a clear national consensus:

Source: PM Modi LinkedIn