The Silent Crisis in Agricultural AI

In India’s vast agricultural landscape, where 600 million farmers cultivate crops that feed 1.4 billion people, a critical technological gap persists. While artificial intelligence promises to revolutionize farming through precision agriculture, crop monitoring, and intelligent advisory systems, current AI models are failing dramatically when it comes to understanding the nuanced, domain-specific knowledge that Indian agriculture demands.

This isn’t just about translation or language barriers—it’s about AI systems that can’t distinguish between rabi and kharif crops, don’t understand soil health parameters specific to Indian conditions, or fail to grasp the intricate relationship between monsoon patterns and agricultural practices that have evolved over millennia.

The introduction of BhashaBench-Krishi marks a watershed moment in agricultural AI evaluation, providing the first comprehensive benchmark grounded in government agricultural exams and specifically tailored to Indian agricultural knowledge. The results reveal significant performance gaps, and closing them could determine whether digital tools transform Indian agriculture or leave millions of farmers behind.

Why Current AI Benchmarks Miss the Mark

The Agriculture Knowledge Desert

A number of specialized agricultural AI benchmarks have emerged in recent years:

- AgriBench/MM-LUCAS: EU field image classification (2024)

- BVL QA Corpus: German agricultural QA (2024)

- AgXQA: irrigation-focused extractive QA (2024)

- CROP Benchmark: rice and corn MCQs (2024)

- AgEval: stress identification and quantification (2024)

- AgroEvals with AgroGPT: expert-tuned dialogues (2024)

- SeedBench: breeding and gene–trait reasoning (2025)

- CDDM and CDwPK-VQA: multimodal crop disease diagnosis (2024–2025)

- WheatRustVQA: rust severity detection (2024)

- Krishiq-BERT: Kannada agricultural QA (2024)

- Embrapa Dairy QA: Portuguese dairy queries (2022)

- Agronomy MCQ 98: general MCQs (2024)

However, none of them fully captures the context-rich, region-specific agricultural knowledge required for real-world farming in India.

These benchmarks represent important progress, but each has clear limitations when applied to India’s agricultural context:

- Geographic mismatch – Many focus on EU, Chinese, or South American crops and practices, with little to no relevance for Indian agro-ecological zones.

- Narrow crop scope – Often restricted to a handful of globally important crops (e.g., rice, wheat, maize) rather than India’s 50+ major crops and thousands of local varieties.

- Modality or task isolation – Strong on image recognition, MCQ answering, or disease classification in isolation, but rarely integrate policy knowledge, scheme eligibility, or seasonal agronomy.

- Language limitations – Predominantly English or a single non-Indian language; lack of bilingual (English + Indian languages) coverage.

- Exam disconnect – Few, if any, are grounded in official government agricultural examination questions, which are the closest standardized proxy for real-world farmer advisory competence.

Even broader AI benchmarks like MMLU, MILU, or Sanskriti suffer from the same flaw: agricultural questions, if included, focus on generic scientific principles rather than the nuanced, practice-oriented decisions farmers face.

Example contrast:

- Traditional Benchmark Question (Factoid / Static Knowledge)

Question:

“What is the chemical equation for photosynthesis?”

(Simple factual recall, textbook-based)

- BhashaBench-Krishi Question (Domain-Specific, Contextual Understanding)

Question (in Hindi):

“निम्नलिखित में से कौन-सा कथन मृदा जल विभव और दोमट, बलुई तथा चिकनी मिट्टी के नमी अंश के बीच सही संबंध को स्पष्ट करते हैं?”

(Which statement correctly explains the relationship between soil water potential and moisture content in loamy, sandy, and clay soils?)

Options:

1. दिए गए विभव पर दोमट मृदाओं की अपेक्षा चिकनी मिट्टियाँ अधिक जल रोकती हैं। (At a given potential, clay soils retain more water than loamy soils.)

2. दिए गए विभव पर चिकनी मिट्टियों की अपेक्षा दोमट मृदाएँ अधिक जल रोकती हैं। (At a given potential, loamy soils retain more water than clay soils.)

3. दिए गए विभव पर बलुई मृदाओं की अपेक्षा दोमट मृदाएँ अधिक जल रोकती हैं। (At a given potential, loamy soils retain more water than sandy soils.)

4. दिए गए विभव पर दोमट मृदाओं की अपेक्षा चिकनी मिट्टियाँ कम जल रोकती हैं। (At a given potential, clay soils retain less water than loamy soils.)

Why This Matters

The traditional question tests static, memorized facts (the overall equation of photosynthesis) that don’t change with context or environment.

The BhashaBench-Krishi question, by contrast:

- Requires understanding soil physics and water retention differences across soil types (loamy, sandy, clayey).

- Tests applied agricultural knowledge crucial for irrigation, crop management, and soil conservation.

- Reflects real-world farming challenges and decision-making, impacting crop yield and sustainability.

- Is posed in Hindi, catering to regional language understanding for Indian farmers and agronomists.

The Scale of India’s Agricultural Challenge

India’s agricultural sector introduces complexities that cannot be solved by generic or translated datasets:

- 15 major agro-climatic zones, each with unique crop patterns

- Over 50 major crops, with region-specific varieties and practices

- Seasonal diversity across Kharif, Rabi, and Zaid

- State-specific policies influencing crop planning and subsidies

- Integration of indigenous and modern techniques in farming practice

- Hundreds of government schemes with varying eligibility

- Dynamic market factors such as MSP, procurement policies, and export restrictions

This complexity demands authentic, exam-validated benchmarks that measure an AI’s ability to provide actionable, localized, and policy-aware agricultural advice—not just its ability to recite generalized agronomy facts.

BhashaBench-Krishi: An Agricultural AI Benchmark

Comprehensive Coverage Across Agricultural Domains

BhashaBench-Krishi is part of the BhashaBench series and stands as the most extensive agricultural knowledge evaluation benchmark ever created for Indian languages, rigorously designed for authenticity, coverage, and depth.

Currently, it supports two major languages: English and Hindi, with multiple additional Indic languages planned for upcoming versions.

| Metric | Count | Details |
|---|---|---|
| Government Exams | 55+ | Unique agricultural exams, from grassroots rural development to cutting-edge research |
| Subject Domains | 25+ | Covering 270+ distinct topics across agricultural and allied sciences |
| Total Questions | 15,405 | Rigorously validated and categorized |
| English Questions | 12,648 | Comprehensive coverage across all domains |
| Hindi Questions | 2,757 | Culturally authentic regional content |

From Field to Research Lab – Exam Spectrum

These 55+ unique agricultural exams span a complete continuum of knowledge and skills:

- Entry Level – Rural Outreach & Extension: NABARD Grade A, MP RAEO, and NSCL recruitment, focusing on rural development, community engagement, and farmer advisory work.

- Applied Agriculture & Agribusiness: NFL Management Trainee and IBPS-AFO, bridging farming, markets, and industry applications.

- Postgraduate Entry: ICAR AIEEA PG, with wide syllabus coverage for MSc-level agricultural disciplines.

- Advanced Research Specializations: ICAR AICE PhD, with high-level focus areas like Genetics, Plant Pathology, and Seed Science.

- University & Fellowship Programs: JRF fellowships and university-specific PG/PhD entrance exams in Agronomy, Horticulture, and Biotechnology.

- National Recruitment & Scientist Roles: ASRB scientist recruitment and Pre-PG tests.

Together, these exams map the journey from grassroots skills to scientific innovation.

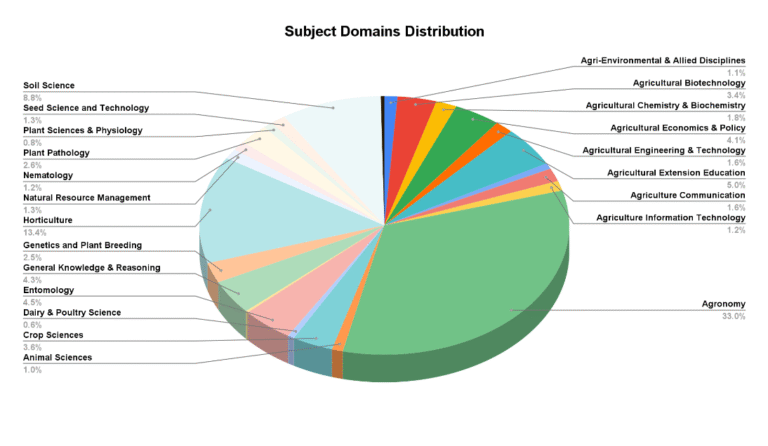

Breadth of Subject Domains

More than 25 domains and 270 topics, covering the entire agricultural and allied sciences ecosystem:

- Core Agricultural Sciences – Agronomy, Soil Science, Crop Sciences, Plant Pathology, Entomology, Nematology, Genetics & Plant Breeding.

- Advanced & Applied Fields – Agricultural Biotechnology, Seed Science & Technology, Plant Physiology, Agricultural Engineering & Technology (mechanization, irrigation, remote sensing).

- Allied Life Sciences – Animal Sciences, Veterinary Sciences, Dairy & Poultry Science, Fisheries & Aquaculture.

- Supporting & Interdisciplinary Disciplines – Agricultural Microbiology, Chemistry & Biochemistry, Agricultural Extension Education.

- Economic & Digital Agriculture – Agricultural Economics & Policy, Agriculture Information Technology.

- Skill & Awareness Areas – General Knowledge & Reasoning, Agriculture Communication, Agri-Environmental & Allied Disciplines (sustainability, climate-smart practices).

Question Difficulty Distribution:

The benchmark questions are categorized into three difficulty levels to comprehensively evaluate model performance across varying complexities.

- Easy: A substantial portion of the dataset (6,754 questions) consists of straightforward questions designed to test basic agricultural knowledge and facts.

- Medium: The largest category, with 6,941 questions, challenges deeper understanding, requiring interpretation and application of agricultural concepts in practical contexts.

- Hard: The most challenging set includes 1,710 questions that demand advanced reasoning, multi-step problem solving, or integration of diverse knowledge areas relevant to farming practices.

Question Type Breakdown:

The dataset contains a diverse variety of question formats to assess different cognitive skills and reasoning abilities.

- Multiple Choice Questions (MCQ): The majority of questions (13,550) are traditional MCQs that evaluate knowledge recall and application.

- Assertion–Reasoning: 648 questions require evaluating the correctness of statements and their logical relationships, testing analytical thinking.

- Match the Column: 949 questions ask for pairing related items, encouraging understanding of relationships between concepts.

- Rearrange the Sequence: 209 questions assess the ability to order steps or processes correctly, which is critical for procedural knowledge in agriculture.

- Fill in the Blanks: 49 questions focus on recall of specific terms or values, emphasizing precise knowledge.
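The counts above can be cross-checked in a few lines. The Python sketch below is an illustrative check of our own, not part of the benchmark tooling: it confirms that the language, difficulty, and question-type breakdowns each add up to the 15,405-question total and reports each category's share. The variable and function names are our own.

```python
# Counts reported in this section; the dictionary layout is illustrative.
TOTAL = 15_405

BREAKDOWNS = {
    "language": {"English": 12_648, "Hindi": 2_757},
    "difficulty": {"Easy": 6_754, "Medium": 6_941, "Hard": 1_710},
    "question_type": {
        "MCQ": 13_550,
        "Assertion-Reasoning": 648,
        "Match the Column": 949,
        "Rearrange the Sequence": 209,
        "Fill in the Blanks": 49,
    },
}


def shares(counts: dict, total: int) -> dict:
    """Return each category's percentage of `total`, rounded to two decimals.

    Raises ValueError if the categories do not partition the total exactly.
    """
    observed = sum(counts.values())
    if observed != total:
        raise ValueError(f"categories sum to {observed}, expected {total}")
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


if __name__ == "__main__":
    for axis, counts in BREAKDOWNS.items():
        print(f"{axis}: {shares(counts, TOTAL)}")
```

Run as a script, it shows that Easy, Medium, and Hard questions make up roughly 43.8%, 45.1%, and 11.1% of the benchmark, and that Hindi questions account for about 17.9%.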

Methodology: From Government Exams to AI Benchmark

Stage 1: Authentic Data Collection

Our process begins with thorough sourcing of authentic agricultural exam content from trusted government and institutional sources:

- Official Exam Portals: Including ICAR, NABARD Grade A, MP RAEO, NSC and various state agricultural universities

- Government Publications: Agricultural department question banks and official releases

- Institutional Sources: Agricultural colleges and research institutes

- Verification: Cross-referencing questions across multiple official sources to ensure authenticity and accuracy with domain expert validation.
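Cross-referencing the same question across several sources is, at its core, a grouping problem. The Python sketch below shows one way to do it under our own assumptions. Each collected item is a (source label, question text) pair with hypothetical labels. Two questions count as the same if they match after Unicode normalization, case-folding, and punctuation removal. The sketch flags questions attested by at least two independent sources. The matching rules actually used for BhashaBench-Krishi are not described here and may differ.

```python
import unicodedata
from collections import defaultdict


def normalize(text: str) -> str:
    """Canonical matching form: NFC, case-folded, punctuation removed, single spaces.

    Punctuation is detected via Unicode categories (P*), so Devanagari letters and
    vowel signs survive while the danda (।) is stripped.
    """
    text = unicodedata.normalize("NFC", text).casefold()
    text = "".join(" " if unicodedata.category(ch).startswith("P") else ch for ch in text)
    return " ".join(text.split())


def cross_reference(items: list) -> dict:
    """Map each normalized question to the set of source labels it was found in.

    `items` holds (source_label, question_text) pairs; labels are hypothetical.
    """
    attested = defaultdict(set)
    for source, question in items:
        attested[normalize(question)].add(source)
    return dict(attested)


def multiply_attested(items: list, min_sources: int = 2) -> list:
    """Questions found in at least `min_sources` distinct sources."""
    return [q for q, srcs in cross_reference(items).items() if len(srcs) >= min_sources]


if __name__ == "__main__":
    collected = [
        ("exam_portal", "Which soil retains the most water at a given potential?"),
        ("question_bank", "Which soil retains the most water at a given potential ?"),
        ("exam_portal", "Name one rabi crop."),
    ]
    print(multiply_attested(collected))
```

Category-based punctuation stripping is used instead of a `\w` regex because Python's `\w` does not match Devanagari vowel signs, which would split Hindi words apart.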

Stage 2: Advanced OCR Processing

To digitize physical exam papers, we employ state-of-the-art OCR technology tailored for Indian agricultural content:

- OCR Model: Surya, chosen for its superior recognition of Indian languages and technical terms

- Multi-Script Handling: Robust processing of English, Hindi, and agricultural terminology

- Format Preservation: Careful retention of question structure, numbering, and formatting integrity

Stage 3: AI-Powered Post-Correction

We apply large multilingual language models to enhance the textual quality and correctness of digitized content:

- Correction Model: Qwen3-235B, selected for its exceptional multilingual and domain-specific understanding

- Context-Aware Correction: Ensuring accurate interpretation of agricultural jargon and technical content

- Quality Validation: Multiple iterative correction passes combined with domain-specific consistency checks
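Multiple iterative correction passes can be pictured as a fixed-point loop. The corrector runs repeatedly until it stops changing the text or a pass budget runs out, and structural checks are applied afterwards. The sketch below assumes a generic `correct` callable standing in for the LLM; it does not call Qwen3-235B or any real API. The MCQ consistency rules, including treating runs of underscores as OCR residue, are hypothetical examples of a domain-specific check.

```python
from typing import Callable, List, Tuple

# Stand-in for the LLM corrector: any function from raw text to revised text.
Corrector = Callable[[str], str]


def iterative_correct(text: str, correct: Corrector, max_passes: int = 3) -> Tuple[str, int]:
    """Re-apply `correct` until a pass makes no change or `max_passes` is reached.

    Returns the final text and the number of passes actually run.
    """
    passes = 0
    for passes in range(1, max_passes + 1):
        revised = correct(text)
        if revised == text:  # converged: this pass changed nothing
            break
        text = revised
    return text, passes


def mcq_is_consistent(stem: str, options: List[str], answer: int) -> bool:
    """Hypothetical structural check for a four-option MCQ after correction."""
    cleaned = [o.strip() for o in options]
    return (
        bool(stem.strip())
        and len(cleaned) == 4
        and all(cleaned)                           # no empty options
        and len(set(cleaned)) == 4                 # no duplicated options
        and not any("___" in o for o in cleaned)   # leftover OCR blanks
        and 1 <= answer <= 4                       # answer key points at a real option
    )
```

For example, a toy corrector that only collapses repeated spaces turns `"a  b"` into `"a b"` on the first pass and confirms convergence on the second, so `iterative_correct` returns `("a b", 2)`.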

Stage 4: Intelligent Topic Classification

Our classification approach combined official exam metadata with advanced language model capabilities to create a meaningful topic hierarchy:

- Source-Based Topic Extraction: Questions were initially tagged using the exact subjects and sections from official government exam papers, ensuring authentic curriculum-aligned labels.

- LLM-Guided Domain Consolidation: We employed the Qwen3-235B model to analyze and group multiple related exam topics into broader, semantically coherent agricultural domains. For example, topics like “Crop Physiology,” “Crop Nutrition,” and “Crop Protection” were consolidated under the broader domain “Crop Sciences.”

- Hierarchical Topic Structure: This produced a two-level classification system, mapping detailed exam sections into 25+ higher-level agricultural domains, facilitating both granular and summary analyses.

- Accuracy and Validation: The Qwen3-235B model achieved an accuracy of 93% in correctly assigning domains, as measured on a manually verified validation set. Domain experts further reviewed and refined the classifications to ensure domain relevance and quality.

This method enabled scalable, precise, and semantically meaningful topic organization tailored for the agricultural benchmark.
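A small sketch makes the two-level hierarchy and the 93% figure concrete. Here a plain dictionary plays the role of the LLM-proposed consolidation; only the Crop Sciences mapping comes from the example above. Accuracy is measured as agreement with manually verified labels on a validation set. The function names and the `Unmapped` bucket are our own illustrative choices.

```python
from collections import Counter
from typing import Dict, List

# Illustrative topic -> domain mapping; the three entries mirror the example in the text.
TOPIC_TO_DOMAIN: Dict[str, str] = {
    "Crop Physiology": "Crop Sciences",
    "Crop Nutrition": "Crop Sciences",
    "Crop Protection": "Crop Sciences",
}


def consolidate(topics: List[str], mapping: Dict[str, str]) -> Counter:
    """Count questions per higher-level domain; unknown topics go to 'Unmapped' for review."""
    return Counter(mapping.get(t, "Unmapped") for t in topics)


def assignment_accuracy(predicted: Dict[str, str], gold: Dict[str, str]) -> float:
    """Share of manually verified questions whose predicted domain matches the gold label."""
    if not gold:
        raise ValueError("validation set is empty")
    correct = sum(predicted.get(qid) == domain for qid, domain in gold.items())
    return correct / len(gold)


if __name__ == "__main__":
    exam_topics = ["Crop Physiology", "Crop Nutrition", "Crop Protection", "Farm Accounts"]
    print(consolidate(exam_topics, TOPIC_TO_DOMAIN))
```

Routing unknown topics to an explicit bucket, rather than guessing, leaves them visible for the expert review step described above.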

Stage 5: Linguistic and Cultural Expert Validation

A detailed manual review focused on language quality, cultural relevance, and regional appropriateness to ensure the questions are clearly understandable and contextually accurate:

- Expert Team: 30 agricultural language specialists reviewed the questions for linguistic clarity, technical terminology correctness, and cultural sensitivity.

- Multi-Layer Quality Assurance: Checks included grammar, translation accuracy, and alignment with regional farming contexts across diverse agro-climatic zones.

- Verification Accuracy: Approximately 85% of questions passed this initial validation as-is; the remaining 15% were corrected by the language experts to meet quality standards.

Stage 6: Agricultural Domain Specialist Evaluation

A separate, focused evaluation conducted by senior agricultural professionals to verify the technical correctness and practical relevance of the benchmark questions:

- Domain Specialists: About 10 seasoned agricultural scientists and practitioners assessed the questions for scientific accuracy and real-world applicability.

- Validation Outcome: Roughly 96% of the questions were confirmed as technically valid and relevant, ensuring the benchmark’s strong alignment with agricultural knowledge and practice.

Results: AI’s Agricultural Knowledge Gap

Overall Model Performance

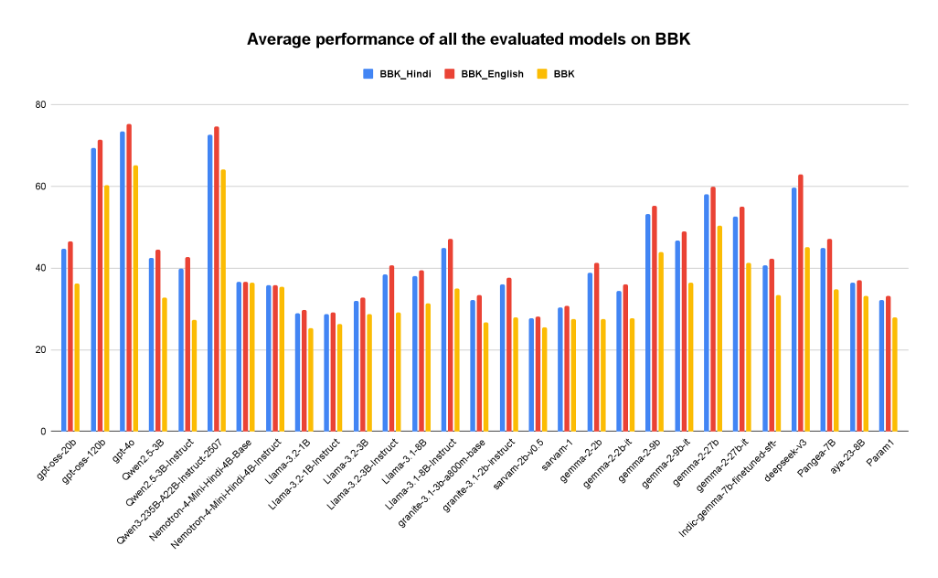

The evaluation of 29+ language models on the BhashaBench-Krishi agricultural benchmark reveals notable variation in performance:

- Higher-performing models such as GPT-4o, Qwen3-235B-A22B-Instruct-2507, and gpt-oss-120b consistently achieve the best accuracy, reaching scores above 70% on the overall benchmark and the English subset.

- The Hindi subset sees generally lower scores across models, with top performers scoring around 60–65%, indicating challenges remain for regional language understanding.

- Lower-performing models cluster around the 25–40% range, showing limited domain knowledge or language adaptation for agriculture.

Overall, these results highlight a clear gap in AI’s agricultural domain expertise, especially in multilingual contexts, with room for further improvement across most models.
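The overall, English, and Hindi figures, like the domain, difficulty, and question-type breakdowns, are grouped accuracies. Below is a minimal sketch of that computation. It assumes each evaluated item is stored as a dict with hypothetical fields `response`, `gold`, and a grouping field such as `language`. The regex-based answer extraction is also an assumption and not necessarily how the benchmark's evaluation harness parses model output.

```python
import re
from collections import defaultdict
from typing import Dict, List, Optional

# Hypothetical parser: the first standalone option number 1-4 in the model's reply.
_OPTION = re.compile(r"\b([1-4])\b")


def extract_choice(response: str) -> Optional[int]:
    """Return the chosen option number, or None if the reply names no option."""
    match = _OPTION.search(response)
    return int(match.group(1)) if match else None


def accuracy_by(records: List[dict], key: str) -> Dict[str, float]:
    """Accuracy for each value of `key` (e.g. 'language', 'difficulty', 'domain').

    Each record needs 'response' (raw model text) and 'gold' (correct option number);
    unparseable responses count as wrong.
    """
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for rec in records:
        group = rec[key]
        totals[group] += 1
        hits[group] += int(extract_choice(rec["response"]) == rec["gold"])
    return {group: hits[group] / totals[group] for group in totals}
```

Counting unparseable replies as wrong, rather than dropping them, keeps the denominator fixed per subset, so models that ignore the answer format are penalized rather than flattered.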

Domain-Wise Performance Disparities in Agricultural AI

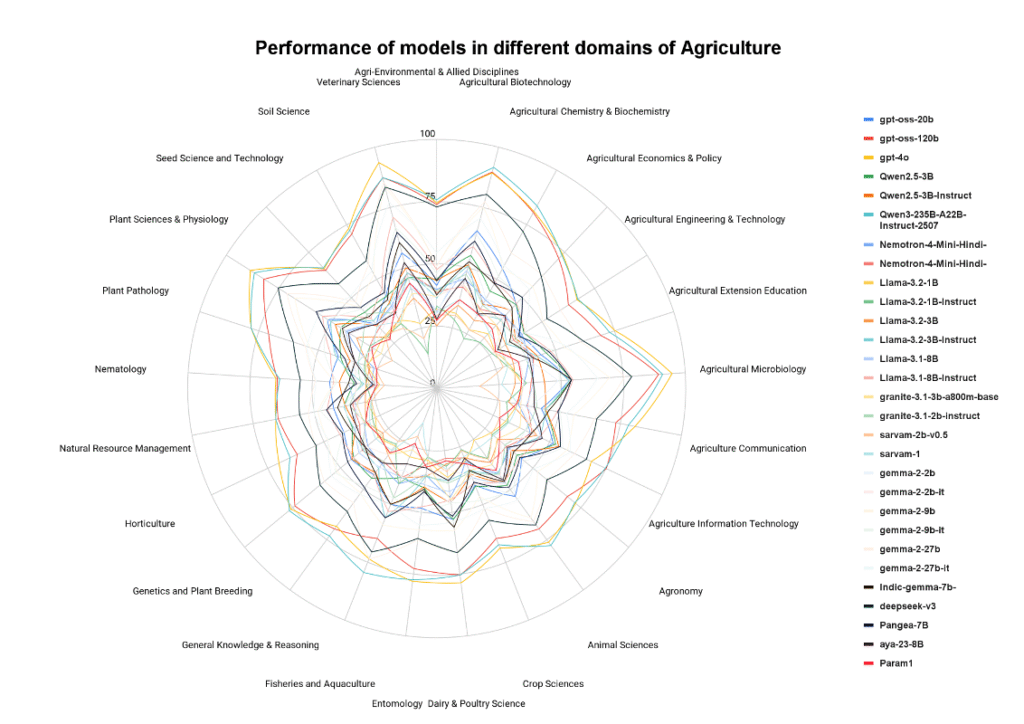

The benchmark results reveal significant variation in model performance across different agricultural subject domains, highlighting areas of strength and weakness in current AI capabilities:

Highest Performing Domains

- Agricultural Biotechnology, Plant Sciences & Physiology, and Veterinary Sciences demonstrate consistently strong model accuracy, often exceeding 80% in top models. These domains likely benefit from well-established scientific knowledge and standardized terminology.

- Agricultural Chemistry & Biochemistry and Agronomy also see strong performance, reflecting robust documentation and universal concepts.

Moderate Performance Domains

- Domains such as Soil Science, Agricultural Engineering & Technology, and Animal Sciences show moderate accuracy in the 40–70% range. These fields often combine universal scientific principles with regional and practical knowledge, posing moderate challenges for AI models.

- Agricultural Extension Education and Agriculture Communication score in the mid-range, indicating difficulty in modeling culturally nuanced or communication-heavy content.

Lowest Performing Domains

- Agri-Environmental & Allied Disciplines, Nematology, and Regional Crop Management see relatively lower scores (often in the 40–50% range or below), reflecting the complexity and location-specific expertise required.

- Domains involving Rural Development and Policy Understanding are also challenging, as they require contextual and social knowledge beyond textbook facts.

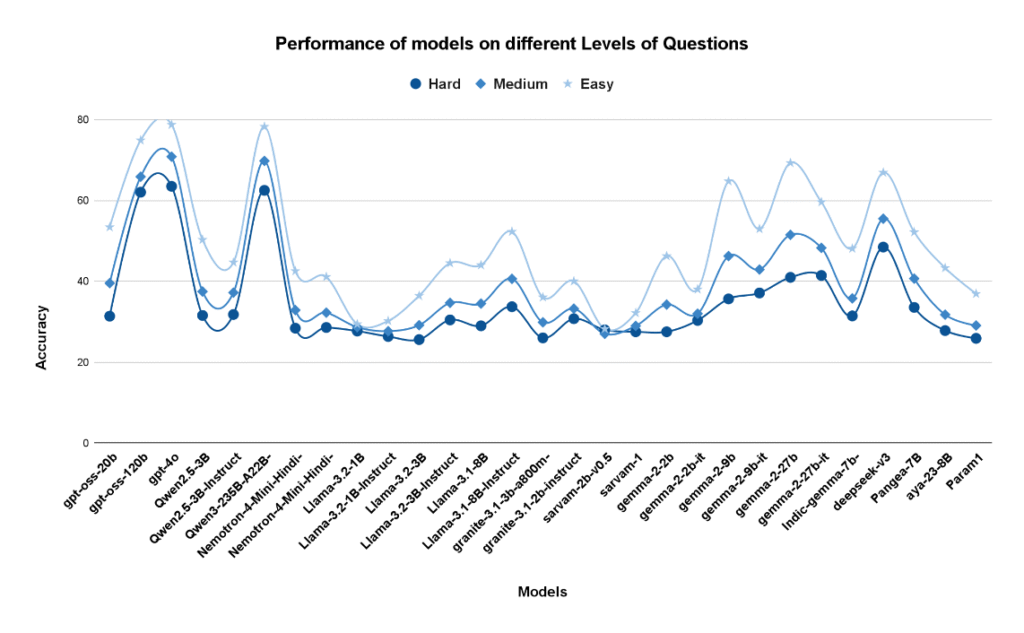

Performance by Question Level

Models show distinct differences in accuracy depending on the difficulty level of questions:

- Easy questions see the highest accuracy across the board, with top models like GPT-4o and Qwen3-235B achieving nearly 79% and 78%, respectively. Most models perform significantly better on easy questions than on harder ones.

- Medium-level questions pose a moderate challenge, with accuracies generally dropping by 10–20 percentage points from the easy category. Top models maintain scores in the 65–70% range.

- Hard questions show the lowest performance across all models, often dropping below 40% even for the best models. This highlights challenges in handling complex, nuanced agricultural knowledge or reasoning.

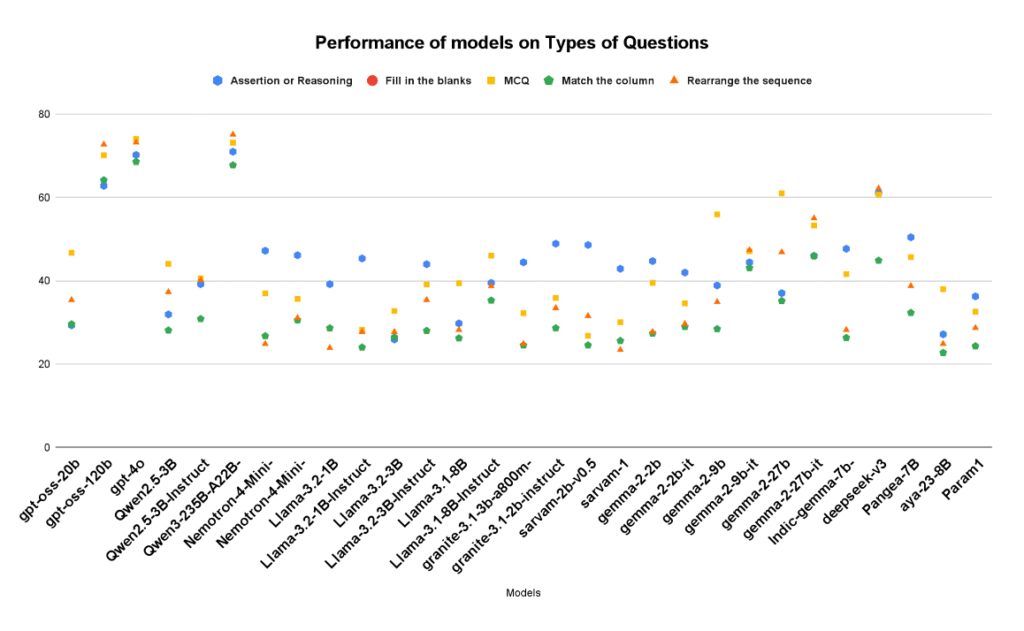

Performance by Question Type

Model accuracy also varies by question format, reflecting the nature of the cognitive skills required:

- Multiple Choice Questions (MCQ): MCQs yield the highest accuracy overall. Top models consistently score above 70%, benefiting from clearly defined options and direct answers.

- Rearrange the Sequence: These questions test logical ordering and multi-step reasoning. Models perform well here, with top accuracies around 73–75%, slightly below MCQ performance.

- Match the Column: Matching tasks assess associative knowledge between pairs of concepts. Performance is somewhat lower, generally ranging from 60–68% in leading models.

- Assertion–Reasoning: This category requires deeper inference and justification, resulting in lower accuracy scores. Top models reach 70%, but many fall significantly behind.

- Fill in the Blanks: Fill-in-the-blank questions are the most challenging format, with accuracies frequently below 60%, indicating difficulty in generating a precise word or phrase without options to choose from.
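Fill-in-the-blank items offer no options, so scoring them means comparing free text with a reference answer. One common recipe, similar in spirit to extractive QA benchmarks such as SQuAD, combines exact match after normalization with a token-level F1 that gives partial credit. The sketch below shows that recipe. Whether BhashaBench-Krishi scores blanks this way is our assumption, and the normalization used here (NFC, case-folding, punctuation removal) is illustrative.

```python
import unicodedata
from collections import Counter
from typing import List


def _normalize(answer: str) -> str:
    """NFC, case-fold, replace punctuation (Unicode P* categories) with spaces, squeeze spaces."""
    answer = unicodedata.normalize("NFC", answer).casefold()
    answer = "".join(" " if unicodedata.category(c).startswith("P") else c for c in answer)
    return " ".join(answer.split())


def exact_match(prediction: str, accepted: List[str]) -> bool:
    """True if the prediction equals any accepted answer after normalization."""
    pred = _normalize(prediction)
    return any(pred == _normalize(ref) for ref in accepted)


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between prediction and reference."""
    pred_tokens = _normalize(prediction).split()
    ref_tokens = _normalize(reference).split()
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

A prediction of "nitrogen fixation" against the reference "biological nitrogen fixation" earns no exact-match credit but a token F1 of 0.8, since precision is 1 and recall is 2/3.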

The Real-World Impact: Why This Matters

Agricultural AI Applications at Risk

The performance gaps revealed by BhashaBench-Krishi have immediate and significant implications for critical agricultural AI applications:

- Precision Agriculture Systems: Current AI models struggle to reliably interpret soil test results in Indian contexts. Fertilizer recommendation systems lack nuanced understanding of local soil conditions, while crop monitoring tools often fail to account for diverse regional varieties and farming practices.

- Agricultural Advisory Chatbots: These systems frequently provide inaccurate or generic guidance on seasonal farming activities. They fall short in understanding government schemes and subsidy eligibility, and they fail to adapt advice to specific agro-climatic zones.

- Smart Farming Solutions: AI-driven irrigation recommendations overlook local water management traditions. Pest management advice is often disconnected from regional pest biology. Market price predictions tend to ignore local economic and market dynamics, limiting their utility for farmers.

The Economic Stakes

Poor AI performance in agriculture risks huge economic and social consequences:

- India’s $400 billion agricultural sector is vulnerable to suboptimal AI deployment.

- 600 million farmers risk exclusion from AI-powered solutions that could enhance productivity and livelihoods.

- Food security for 1.4 billion people dependent on efficient agricultural systems is potentially jeopardized.

- The digital divide may widen further between technology-enabled large-scale farms and traditional smallholders.

Beyond Agriculture: Implications for Domain-Specific AI

The challenges highlighted by BhashaBench-Krishi extend to other domain-specific AI applications:

- Professional Knowledge Gaps: Medical AI systems miss regional disease patterns. Legal AI often lacks jurisdiction-specific insights. Educational AI may be unaware of localized curricula.

- Cultural Context Requirements: Integration of traditional knowledge, recognition of regional practices, and understanding local institutions remain unmet needs across domains.

- Language and Domain Intersection: Technical terminology in local languages, cultural concepts without direct translations, and domain-specific communication patterns pose ongoing difficulties for AI systems.

Future Directions: Building Agricultural AI That Works

Immediate Research Priorities

- Data Collection Enhancements:

  - Integrate regional state-level agricultural competitive exams.

  - Expand practical, field-based problem scenarios.

  - Document indigenous and traditional agricultural knowledge.

  - Include real-time updates on current schemes, policies, and technologies.


- Model Development Focus:

  - Pre-train domain-specific agricultural language models.

  - Build truly multilingual agricultural AI beyond just Hindi and English.

  - Develop multimodal systems combining images, text, and sensor data.

  - Create adaptive learning models that continuously update agricultural knowledge.


Long-Term Vision for Agricultural AI

- Comprehensive Agricultural Intelligence: AI systems that understand the full agricultural ecosystem, blending traditional wisdom with modern science, adapting in real time to changing conditions, and providing personalized, location-specific farming guidance.

- Inclusive Agricultural Technology: Tools accessible to smallholder farmers, supporting all major regional languages, culturally sensitive in recommendations, and economically viable for diverse farming operations.

Conclusion: Cultivating AI for India’s Agricultural Future

BhashaBench-Krishi is more than an evaluation framework — it is a clarion call to the AI community. While modern language models show impressive capabilities, they currently:

- Exhibit large blind spots in specialized agricultural knowledge.

- Demonstrate that scale alone does not guarantee professional competency.

- Struggle to incorporate cultural and regional context essential for real-world farming.

- Reveal a wider and more consequential AI readiness gap for agriculture than previously understood.

The Path to Agricultural AI Excellence

Creating AI that genuinely serves agriculture requires:

- Domain-First Development: Building agricultural expertise into AI systems from the ground up.

- Community Engagement: Active collaboration with farmers, agricultural scientists, and extension workers.

- Comprehensive Evaluation: Using benchmarks like BhashaBench-Krishi that test practical competency beyond academic knowledge.

- Sustained Investment: Committing to long-term research and development in agricultural AI.

A Call to Action

At the crossroads of AI innovation and agricultural transformation, BhashaBench-Krishi offers both challenge and opportunity:

- The challenge — to create AI sophisticated enough to manage the complexities of agricultural knowledge.

- The opportunity — to democratize access to agricultural expertise and revolutionize farming for millions.

The complete BhashaBench-Krishi dataset is publicly available on Hugging Face and fully integrated with LMeval for ongoing research and development. This benchmark embodies our shared commitment to ensuring AI’s agricultural revolution leaves no farmer behind.

For India’s agricultural future — and for the 600 million farmers whose knowledge and dedication feed our nation — we must demand better from AI systems. BhashaBench-Krishi lights the way forward.

Access our benchmark on Hugging Face: bharatgenai/BhashaBench-Krishi

Contact Details

For any questions or feedback, please contact:

- Vijay Devane (vijay.devane@tihiitb.org)

- Mohd. Nauman (mohd.nauman@tihiitb.org)

- Bhargav Patel (bhargav.patel@tihiitb.org)

- Kundeshwar Pundalik (kundeshwar.pundalik@tihiitb.org)